"No One Ever Cried At A Website"

Do people really think this? Do they accept it as a truth? Is it even true? Has there yet been the one case that proves the statement false? Surely, on some rainy autumn day, a middle-aged mum, tired and emotional, has been suddenly overcome by the sight of some elegant bevelling...? It must have happened a million times.

It was Evan Boehm who reminded me of this hateful aphorism, on the way back from the Resonate conference in Belgrade. I muttered it to myself, under my breath, for weeks afterwards. It troubled me. What the statement implies is that there is no beauty in digital technologies. No pathos. Nothing that might move someone. Which is utter bollocks. There is nothing intrinsically un-profound about our latest batch of technological marvels, and it is hopelessly shortsighted to suggest there is. But I understand the reasons for this misconception, and those reasons are what I want to discuss below.

Was there ever a point in Rodin's career when he threw his tools to the ground and sobbed that no one had ever cried at a lump of rock? Or did Muybridge or Méliès ever think that no one could be moved by rapidly flickering light? If they did, they didn't let it stop them. I think it's time we too stopped beating ourselves up about where we stand in the history of our digital tools. The conversation should not be about whether someone could be moved, but how we might move them.

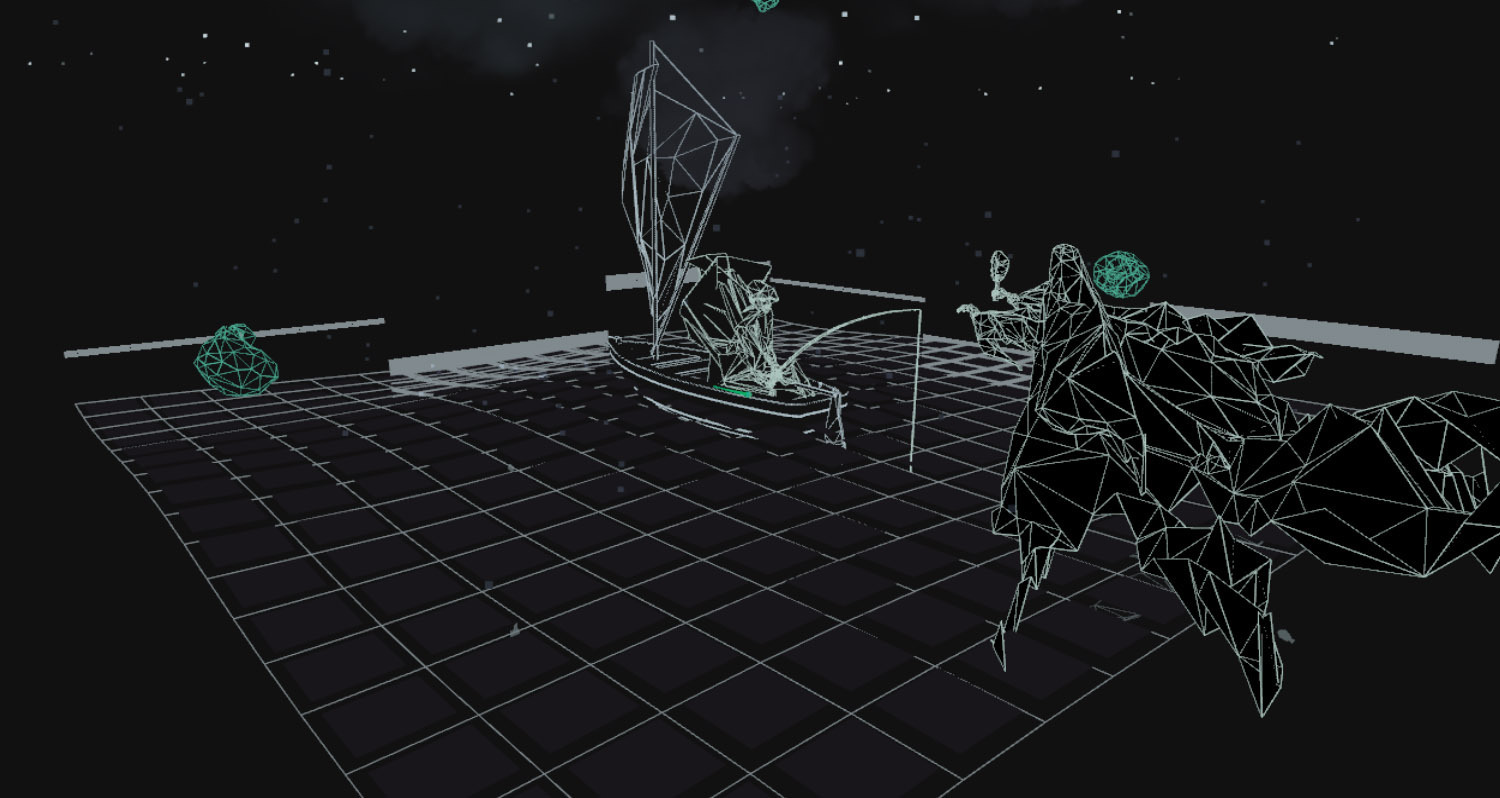

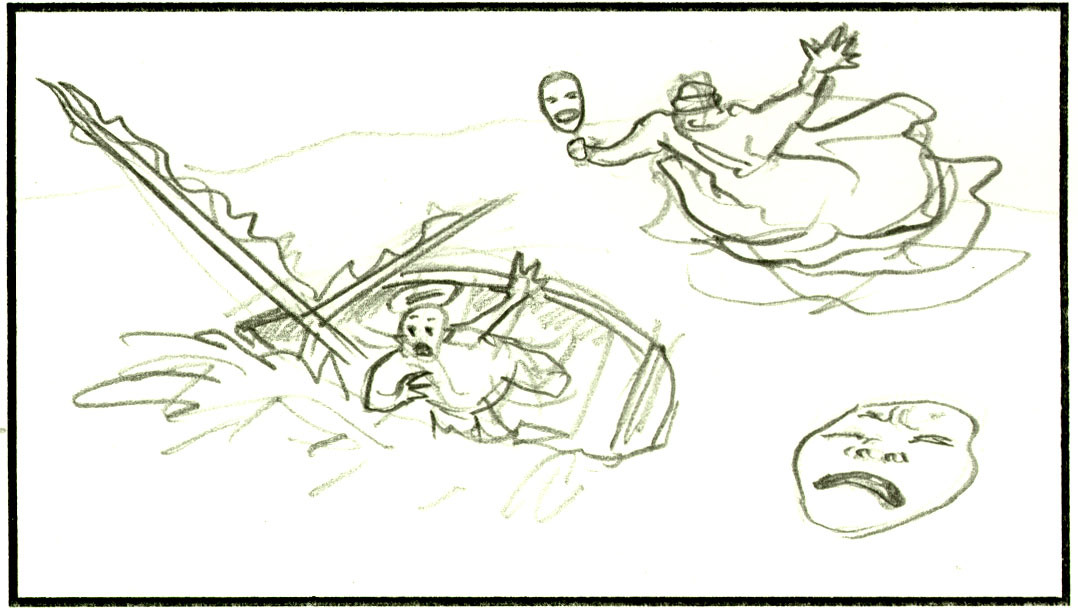

Evan addressed this question with The Carp and The Seagull, a beautiful, melancholy browser experience that gracefully walked the uneasy tightrope between interaction and narrative. On launch there was much wow-ing over the technology behind the piece, WebGL, but what I loved most about it was its boldness: its shameless attempt to aim at the heart using only vector graphics and a mouse. It neither celebrates nor apologises for its technology; it just tells its story in the best way it can.

It may be telling that this work did not start out as a WebGL piece. Over the project's two-year lifespan it took various forms, from short story to linear animation to interactive piece. The technology was not at the core of the idea. It wasn't about WebGL and what could be done with it, even if, inevitably, much of the attention it received was because of this.

This is the nub of the problem. Too much attention is paid to the mechanisms of digital art, rather than the message or intent of the work. Yes, these new technologies are terribly exciting, opening up new possibilities for expression, but that shouldn't be an excuse to label every tech demo we knock out as "art". Art requires a little more than that. Not much, but a little.

In these shifting times programmers seem to have a continual problem deciding what to call themselves. "Digital Artist" seems to be the current job title. Two years ago they were all "Creative Technologists". In 2010, "Code Ninjas". Roses by other names, all smelling just as sweet. Next year they'll be "Imagineers", "NeoArchitects", "Exploraticians", or whatever jargon-du-jour the culture has farted into the lexicon. It's not their fault; they have no idea what they do for a living. No one does. They've all suffered trying to explain it to their mum, or to a crowd of non-geeks at a party. This may be why geeks don't go to many parties.

But that doesn't mean that, in the absence of any better category, they should always be allowed to call their latest experiment "art". It's something we tend to do with things that have no apparent use but are kinda pretty. Digital Art already has enough enemies; it doesn't need its own practitioners sullying the name through misuse.

Evan's work earns the "art" label not because it's technologically interesting, but because it's conceptually interesting. If you don't give any thought to the meaning or emotional involvement of the work, it's not really art. This emotional involvement, if you believe the hypothetical aphorist Evan quotes, is exactly what digital technologies lack. And I'll concede that if you see a long parade of tech demos masquerading as art, you might start to believe this. But it's not true.

Across our current favourite mediums, three emotional trigger mechanisms are commonly employed:

- character identification – feeling what another feels, i.e. empathy;

- sheer beauty – a more abstract, ineffable, gut response; and

- interaction – the ability to make a connection, to invest a part of yourself in something.

The first is probably the most widely used. It is key to mediums such as books and cinema. I'm sure even the coldest hearts reading this will admit to having, at some point, been absorbed by a film or novel, carried along by its narrative. Unless you are a practitioner (i.e. a novelist or film-maker), your attention is effortlessly taken beyond the mechanism of the medium. Most people (I say most) have no problem burying any obsessive assessment of the form far from their conscious enjoyment.

With digital technologies the primary obsession is with how they work. It is only when you put a digital theorist in front of a painting or a ballet that they may be in danger of suffering the second category of emotional trigger: being punched in the gut by sheer beauty. Again, I don't think technology presents any barrier to this response. Generative art, for example, is still a young medium, but it has already produced many works of staggering beauty.

The third category, interaction, is perhaps the most contentious. The digital age has so far been marching towards increasingly active, participatory experiences, which is why interaction is a core part of so many technologies. Culture is no longer something behind a velvet rope or a glass case; it is to be touched and steered and danced with. Technology is an enabler here. Think of Joseph Cornell's boxes (intricate, tactile objects designed for interaction), which we can no longer touch because they have become too valuable. Today we make digital interfaces designed specifically for the great unwashed to prod and poke at with their fat, sweaty fingers.

The Carp and The Seagull plays with interaction as a form of involvement. The interactions are not particularly sophisticated, but they are enough to give a sense of control over how the scene plays out. We nurture the narrative Tamagotchi-style with subtle clicks and swipes.

When you present a work on the web you are dealing with a greatly shortened attention span – you have minutes at best before your viewer clicks away to something else – so standard linear narratives aren't ideal. This is where a little interaction works: just enough to give the user a bit of an investment. Take interaction too far, overuse it, and the experience becomes too open, too user-defined, and the authorial voice is lost. Contrary to what those in marketing would have you believe, not all users want to "create their own stories". This is because they're not all coked-up control freaks like the agency wankers who spout this shite. Some, believe it or not, are interested in what the author is saying.

Another strength of The Carp and The Seagull's control method is that it's not signposted. It is for us to explore and work out for ourselves. I love this approach; I use it a lot in my own projects. Encouraging the user to peek and poke and see-what-it-does can run counter to our grown-up reserve and respect. It's something children do instinctively, before we beat it out of them in our efforts to shape them into adults. It's by tapping into this inner child, the unshielded, sensitive lump at our core, that interaction can become an emotional activity.

Adult reserve is not the only barrier. Interaction designers also have to fight decades of ingrained "ways of interacting with computers". Generations raised on computers that were expensive and easily broken have left us with a rather anachronistic way of approaching them.

Another recent digital artwork, Kyle McDonald's People Staring at Computers, exposed this. Kyle wrote a script that made the webcam on his Mac snap his face at intervals while he used his computer. When he looked back over the images, he saw the same expression, or rather lack of expression: his "looking at a computer" face.

He repeated the experiment on public computers, rigging display machines in Apple Stores to snap people testing them and upload the photos to a Tumblr (a project that earned him a visit from the US Secret Service). He saw the same expressionlessness on strangers' faces. It's the TV-watching expression, that drone-like state of suggestibility that advertisers love. It points to a default mode that we engage when faced with a computer. We might smile at loved ones and cat videos, but the "rest" setting for our interaction is blank-faced and passive.

There is a move to counter this over-serious traditionalism from hackers who insist their machines can be toys as well as work tools. They make much of the importance of playfulness and subversion in their practices. It's a noble cause, but it's a jump to the other extreme. What's missing is a subtle gradient between the two: a range of possible emotional responses.

This is partly why, for so many, it is easy to talk of a dichotomy between technology and emotion. Why it is unimaginable for someone to cry at a website. But I'm going to propose a cure.

It's mostly a matter of semantics.

At this very moment there is an undergraduate somewhere in the world writing a dissertation about the collaboration between art and technology. Apparently it's a hot topic. There is a new trend of programmers calling themselves artists (and artists becoming programmers); I know this because I have read twelve articles on the subject this month. It's not bullshit. It's just irrelevant. It's a straw-man argument, based on the misapprehension that "art" and "technology" wouldn't normally "collaborate" in a natural way.

Technology is a means; art is an end product. There is no meaningful dichotomy between the two. Painting is a technology. The pencil is a technology. The harpsichord is a technology. So why do we never hear of painters being asked their opinion on the "interface" between painting and art? It's because there is nothing interesting to say there.

We only call a medium a technology when it is new, when it still has novelty, when it is not widely understood or accepted by the masses. But all mediums start as technologies – drawing, sculpture, photography, cinema; all these were technologies first, mediums later.

The same goes for programming and art. There is nothing unusual in code-based art forms. There may have been twenty years ago, when it was a more arcane skill. Or forty years ago, when it was only practised by mathematicians. But now everyone can do it. My Generative Art book attempted to prove that point. Coding is just another tool in the artist's toolbox. Another form of expression. It is only unusual to those who choose not to partake.

All artists must choose a means of expression, and learning to code is just one of the available options. It's only as hard as learning a musical instrument. It is just about training your brain to think in a certain, hyper-logical way (the way machines think), just as playing guitar is teaching your fingers to fall into certain patterns. As with any other skill, it's something that takes practice. And something that is best learned as young as possible (my seven-year-old, inspired by his dad, is currently teaching himself Scratch). But it is not a novel skill. It's not peculiar. Not any more.

These technologies are fascinating, yes; there is plenty to get excited about, and plenty for the tech bloggers to write about. But for our technologies to become mediums, for them to reach emotional maturity, we need to get them to a point where we can enjoy them without seeing the mechanism. To squint and see beyond it. Then the weeping can commence.